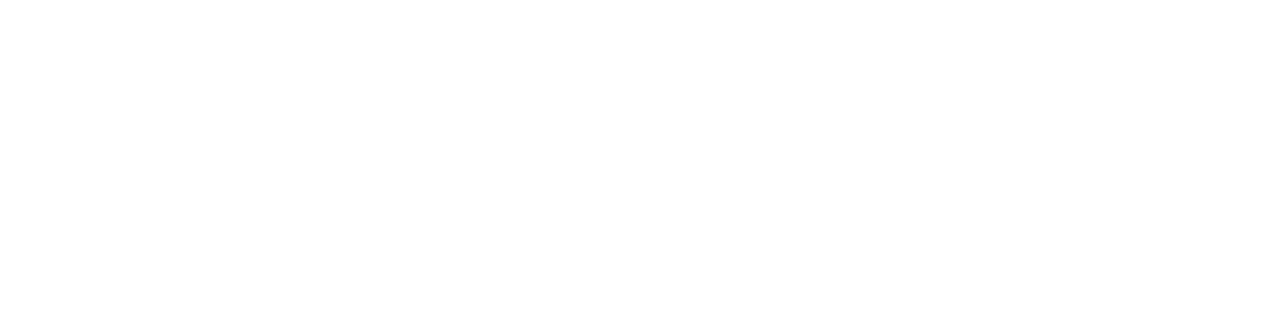

Overview

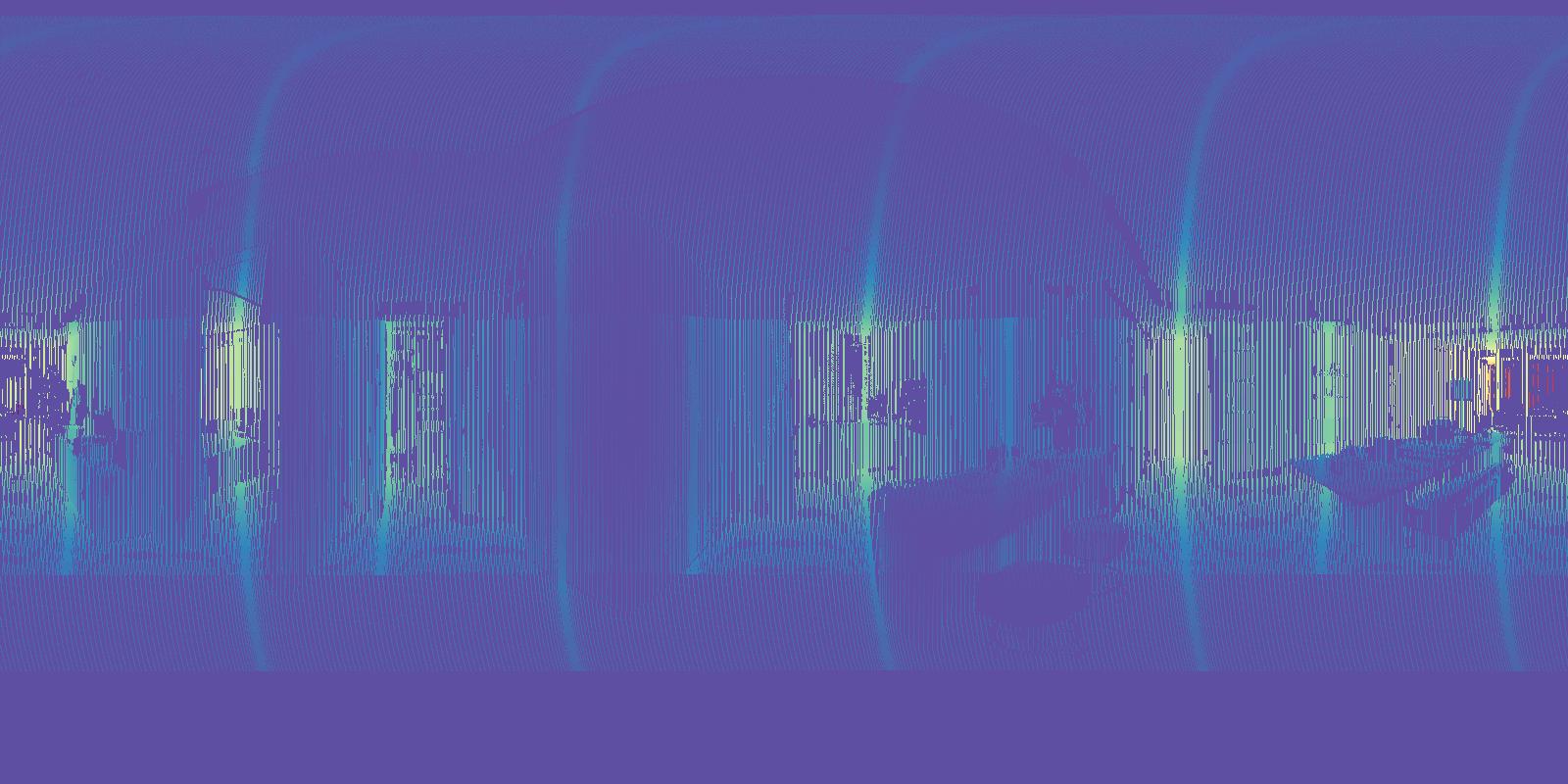

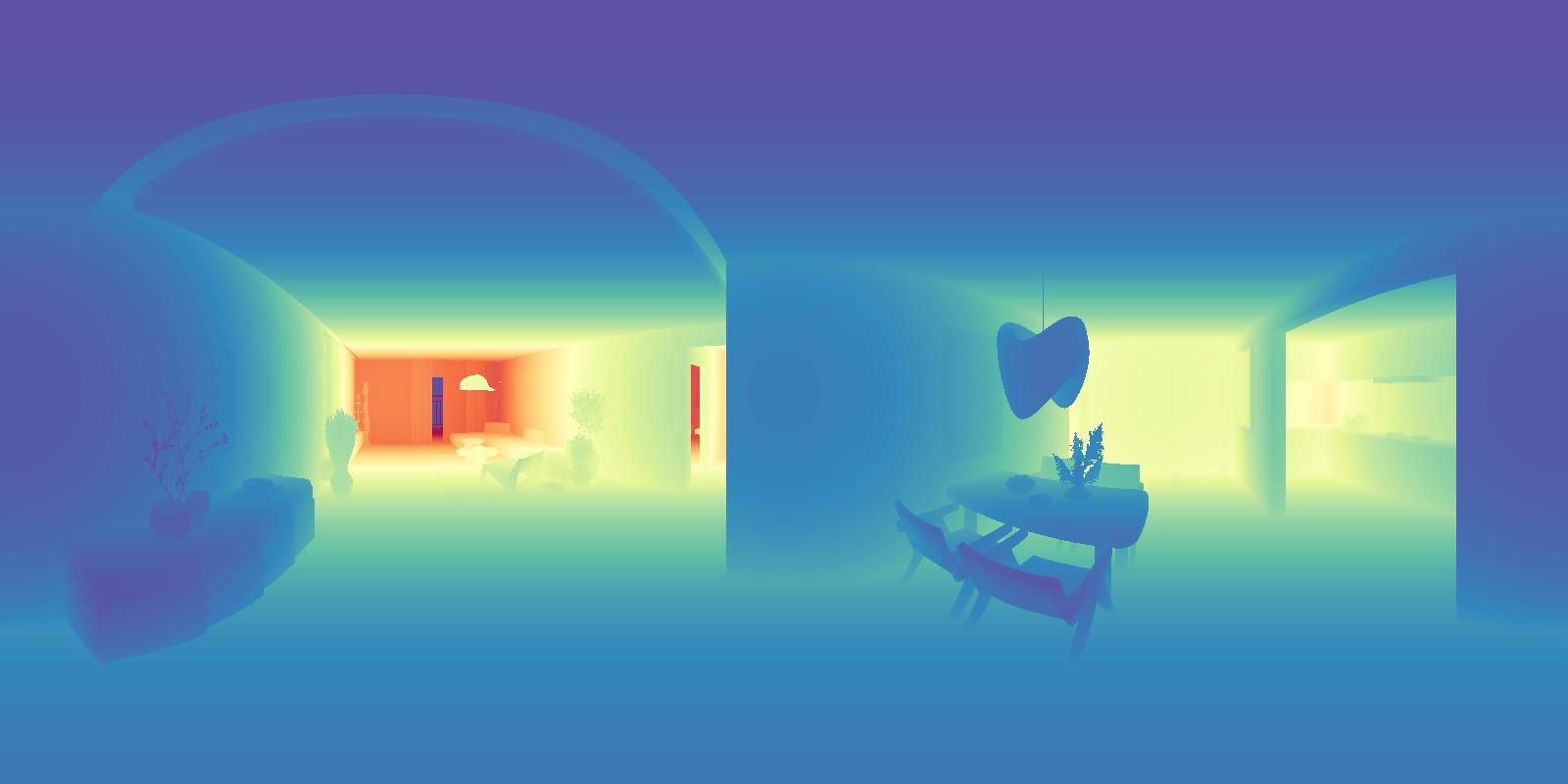

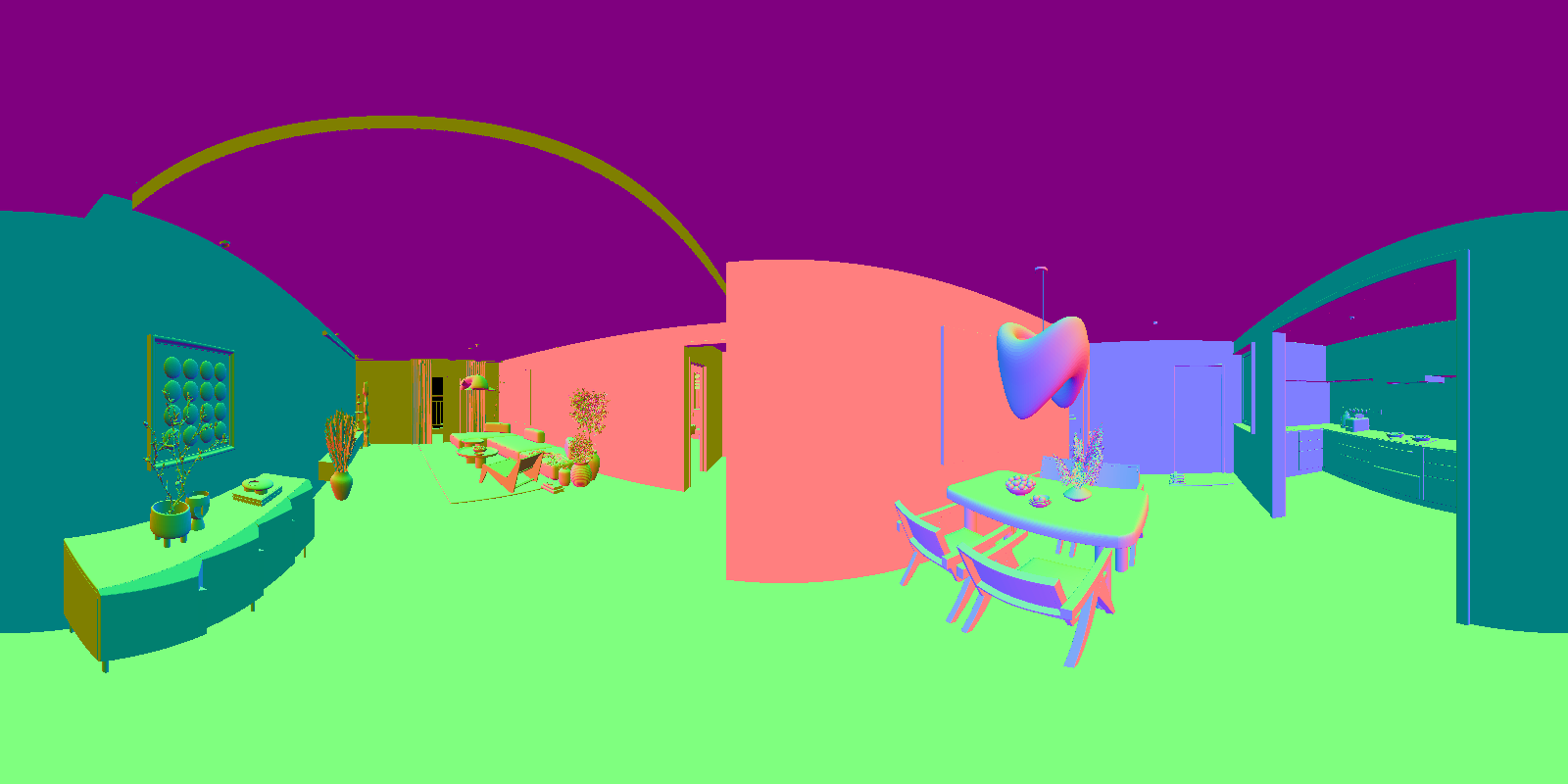

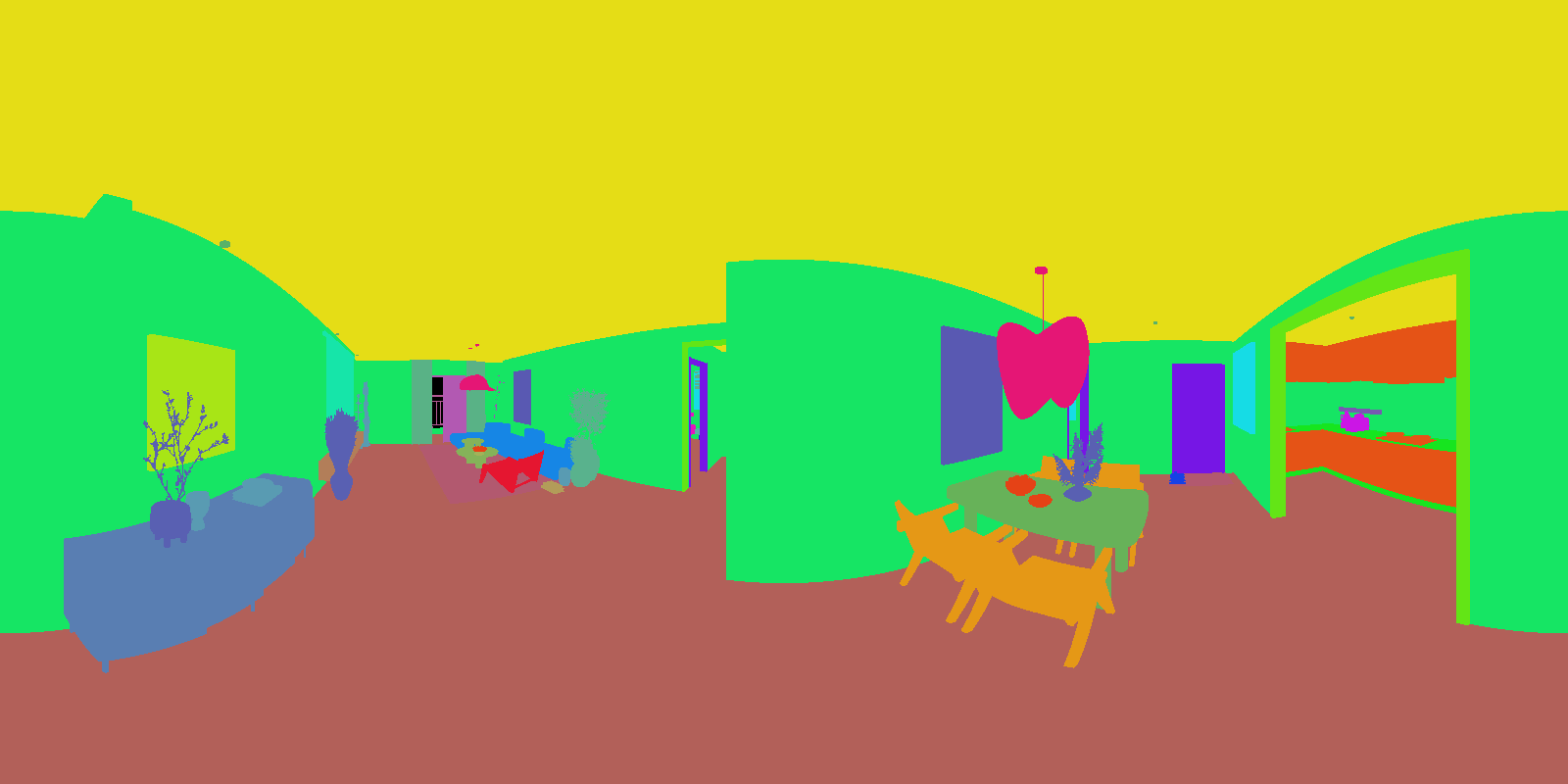

Realsee3D is a large-scale multi-view RGB-D dataset designed to advance research in indoor 3D perception, reconstruction, and scene understanding. It contains 10,000 complete indoor scenes, composed of:

Realsee3D aims to serve as a unified benchmark for high-fidelity indoor scene modeling, facilitating research in geometry reconstruction, multimodal learning, and embodied AI.

Beyond RGB-D imagery, the dataset provides comprehensive annotations, including CAD drawings, floor plans, semantic segmentation labels, and 3D object detection information.